Twitch, the livestreaming service that largely caters to gamers, has exploded in popularity since being acquired by Amazon in 2014—but toxicity on the platform has also increased. This week, Twitch took an important step toward getting a handle on its applause-like "chat" feature, and it goes beyond the usual dictionary-based approach of flagging inappropriate or abusive language.

The Tuesday rollout of a new "ban evasion" flag came with a surprising amount of fanfare, and it puts Twitch in a position to do what many other platforms won't. The company is not only paying attention to "sockpuppet" account generation; it is pledging to squash it.

Spinning up attacks

Pretty much any modern online platform faces the same issue: Users can join, view, and comment on content with little more than an email address. If you want to say nasty things about Ars Technica across the Internet, for example, you could make a ton of new accounts on various sites in a matter of minutes. Your veritable anti-Ars mini-mob requires little more than a series of free email addresses. Should a service require some form of two-factor authentication, you could simply attach spare physical devices or spin up additional phone numbers.

In less hypothetical terms, Twitch creators have dealt with this "hate mob" problem for some time now, with the issue peaking in intensity after Twitch added an "LGBTQIA+" category. Abusive users charged up hyperfocused lasers of hateful speech, usually directed at smaller creators who could be discovered in Twitch's category directory. As I explained in September:

While Twitch includes built-in tools to block or flag messages that trigger a dictionary full of vulgar and hateful terms, many of the biggest hate-mob perpetrators have turned to their own dictionary-combing tools.

These tools allow perpetrators to evade basic moderation tools because they construct words using non-Latin characters—and can generate thousands of facsimiles of notorious slurs by mixing and matching characters, thus looking close enough to the original word. Their power for hate and bigotry explodes thanks to context that turns arguably innocent words into targeted insults, depending on the marginalized group they're aimed at.

Battling these attacks on a dictionary-scanning level isn't so cut and dried, however. As any social media user will tell you, context matters—especially as language evolves and as harassers and abusers co-opt seemingly innocent phrases to target and malign marginalized communities.
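The character-swapping trick quoted above beats filters that compare words verbatim. The Python sketch below contrasts a naive blocklist check with one that normalizes text before matching. It is a hypothetical illustration, not Twitch's filter. The blocklist word, the tiny confusables table, and the function names are placeholders. Production systems usually draw on much larger tables, such as Unicode's published confusables data.

```python
import unicodedata

# Hypothetical blocklist; a harmless placeholder stands in for real slurs.
BLOCKLIST = {"badword"}

# A tiny illustrative subset of look-alike ("confusable") characters,
# mapping letters from other scripts onto their Latin twins.
CONFUSABLES = str.maketrans({
    "\u0430": "a",  # Cyrillic small letter a
    "\u0435": "e",  # Cyrillic small letter ie
    "\u043e": "o",  # Cyrillic small letter o
    "\u0440": "p",  # Cyrillic small letter er
    "\u0441": "c",  # Cyrillic small letter es
    "\u03bf": "o",  # Greek small letter omicron
})

# Invisible characters that can be slipped into the middle of a word.
ZERO_WIDTH = {"\u200b", "\u200c", "\u200d", "\u2060", "\ufeff"}


def naive_filter(message: str) -> bool:
    """Flag a message only if a blocklisted word appears verbatim."""
    return any(word in BLOCKLIST for word in message.casefold().split())


def normalize(text: str) -> str:
    """Fold common character-level disguises back to plain Latin text."""
    # NFKC maps compatibility forms, such as fullwidth letters, to standard ones.
    text = unicodedata.normalize("NFKC", text)
    # Strip zero-width characters hidden inside words.
    text = "".join(ch for ch in text if ch not in ZERO_WIDTH)
    # Lowercase first so uppercase look-alikes also hit the lowercase table.
    return text.casefold().translate(CONFUSABLES)


def normalizing_filter(message: str) -> bool:
    """Flag a message if a blocklisted word appears after normalization."""
    return any(word in BLOCKLIST for word in normalize(message).split())


disguised = "b\u0430dw\u043erd"  # Cyrillic a and o mixed into Latin text
print(naive_filter(disguised))        # False: slips past the verbatim check
print(normalizing_filter(disguised))  # True: caught after normalization
```

Both functions still judge words in isolation. Normalization can undo character disguises, but it cannot recover the context that turns an innocent word into a targeted insult.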

Server-side suspicions

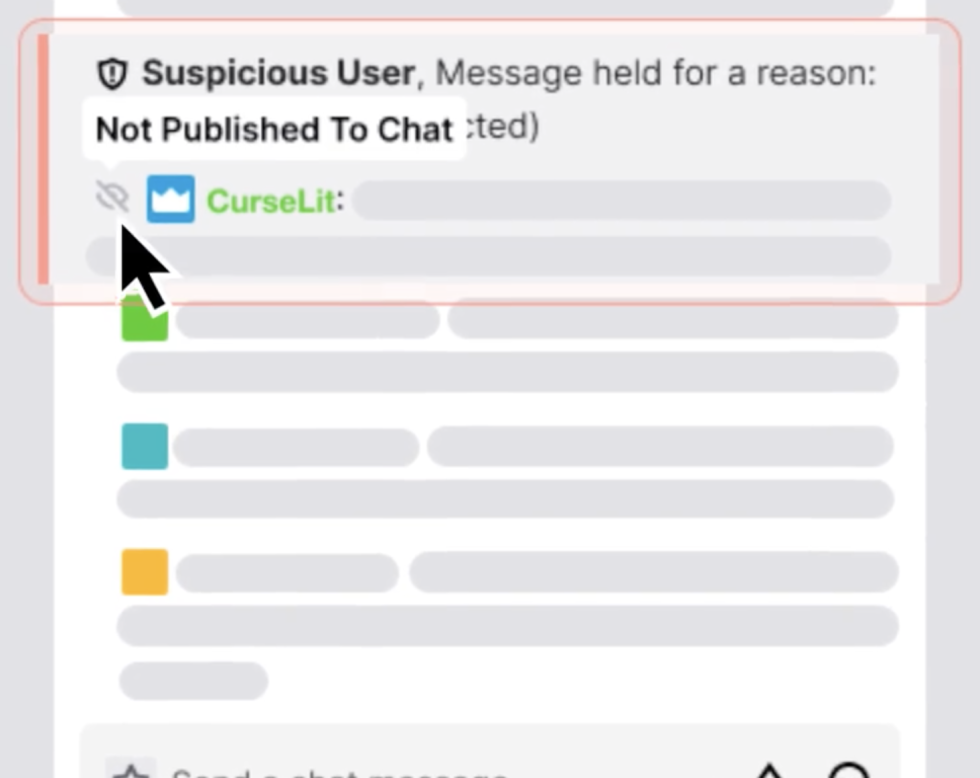

Twitch's "ban evasion" flag takes a different approach. It uses Twitch's server-side information about account generation to determine whether a newly generated account appears to have come from someone who broke the site's terms of service and then created another account. If the account is flagged, the user in question won't notice anything different, but streamers and their moderators will see that user's messages land in a special "ban evasion" moderation silo. This practice is generally known as "shadowbanning" since messages appear to work just fine for the banned person, but nobody else in the chat feed sees them.

If the account was moderated in error or the streamer doesn't see any issue with the flagged user's messages, the flagged user can be unbanned and brought back into public chat. Otherwise, the channel can either kick/ban the flagged user or leave the account shadowbanned. (Twitch also offers a milder version of this flag that leaves the user's chatting abilities untouched but gives moderators a heads-up that an account seems suspicious.)
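The message routing described above can be modeled in a few lines of Python. This is a hypothetical illustration of the flow as the article describes it, not Twitch's implementation or API. The `Flag` levels, the `Channel` class, and its method names are all invented for the sketch.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Flag(Enum):
    NONE = auto()
    MONITORED = auto()   # milder flag: chat works normally, mods get a heads-up
    RESTRICTED = auto()  # "ban evasion" flag: messages go only to the mod silo


@dataclass
class Channel:
    flags: dict = field(default_factory=dict)        # username -> Flag
    banned: set = field(default_factory=set)
    public_chat: list = field(default_factory=list)  # what viewers see
    mod_silo: list = field(default_factory=list)     # what moderators see

    def post(self, user: str, text: str) -> bool:
        """Route one message; return True if the sender sees it as sent."""
        if user in self.banned:
            return False
        flag = self.flags.get(user, Flag.NONE)
        if flag is Flag.RESTRICTED:
            # Shadowban: the sender gets a normal "sent" result,
            # but only moderators ever receive the message.
            self.mod_silo.append((user, text, "ban evasion"))
        elif flag is Flag.MONITORED:
            self.public_chat.append((user, text))
            self.mod_silo.append((user, text, "suspicious"))
        else:
            self.public_chat.append((user, text))
        return True

    def unflag(self, user: str) -> None:
        """Moderator decides the flag was a mistake: restore public chat."""
        self.flags.pop(user, None)

    def ban(self, user: str) -> None:
        """Moderator confirms the evasion and bans the account outright."""
        self.flags.pop(user, None)
        self.banned.add(user)
```

The key design point is that `post` returns the same result to a restricted user as to an unflagged one, which is what makes the ban "shadow": nothing on the sender's side reveals that the message never reached public chat.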

The move appears to split the difference between immediately shutting down suspicious accounts and letting them roam free and unchecked across Twitch's hills and valleys. It also follows a new optional per-channel toggle, launched in late September, that lets hosts restrict chat to users who have verified their phone number and/or whose accounts have been on Twitch for a certain amount of time.
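A per-channel gate like this reduces to a couple of independent checks. The sketch below assumes hypothetical `ChatSettings` and `Account` types and a `can_chat` helper. Twitch's actual settings, names, and thresholds may differ.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class ChatSettings:
    require_verified_phone: bool = False
    min_account_age: Optional[timedelta] = None  # None means no age requirement


@dataclass
class Account:
    created_at: datetime
    phone_verified: bool


def can_chat(account: Account, settings: ChatSettings, now: datetime) -> bool:
    """Apply a channel's optional phone and account-age gates."""
    if settings.require_verified_phone and not account.phone_verified:
        return False
    if settings.min_account_age is not None:
        if now - account.created_at < settings.min_account_age:
            return False
    return True


now = datetime(2021, 12, 1, tzinfo=timezone.utc)
fresh = Account(created_at=now - timedelta(hours=2), phone_verified=True)
strict = ChatSettings(require_verified_phone=True, min_account_age=timedelta(days=7))
print(can_chat(fresh, strict, now))  # False: account is only two hours old
```

Because each check is optional, a host can enable either one alone or both together, matching the "and/or" choice described above.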

Twitch is essentially admitting to something that "engagement"-obsessed social media sites are loath to confront: Fake accounts exist and they can poison whatever platforms they're created on. This idea tends to run counter to the metrics that site operators love to show off when seeking advertising partners; social media sites would rather not add asterisks to any counts of "monthly active users" when determining advertising rates.

More to learn about machine learning

Still, by coming out and saying to users that "bad actors often choose to create new accounts, jump back into chat, and continue their abusive behavior," Twitch has drawn a line in the sand. The company has gone on record, in a way that other heavily scrutinized social media platforms haven't, to confirm that the issue exists on its platform. That means Twitch has officially invited accountability and scrutiny on the issue going forward.

It's also a clever way to target problematic behavior on a site that supports languages and regions all over the world, since the flag relies on account data rather than on any one language's vocabulary. However, for ban-evasion flags to appear, accounts must first get called out or banned, and that means Twitch's vague pledge of "develop[ing] more tools to prevent hate, harassment, and ban evasion" will probably require a lot of additional work, moderation, and supervision.

Twitch's Tuesday announcement includes a vague description of using "machine learning" to assess whether an account is "suspicious," though the platform doesn't clarify what data sets it draws on to make that determination. Is Twitch looking for specific IP addresses? Traces of VPN usage? Incognito browsing sessions? Cookies that a careless harasser failed to clear? Specific chat phrases used within the first few hours of the account's creation? It's unclear what massive data sets Twitch is scanning to home in on inauthentic accounts.

In response to our questions about this system, a Twitch spokesperson offered the following statement: "The tool is powered by a machine learning model that takes a number of signals into account—including, but not limited to, the user's behavior and account characteristics—and compares that data against accounts previously banned from a Creator's channel to assess the likelihood the account is evading a previous channel-level ban."
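Twitch doesn't describe its model, so the Python below is only an illustrative stand-in for the comparison the spokesperson outlines. Instead of a trained machine learning model, it scores a new account by its highest cosine similarity to feature vectors of accounts previously banned from the same channel. The feature vectors, thresholds, and function names are all assumptions made for this sketch.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def evasion_score(candidate: Sequence[float],
                  banned: Sequence[Sequence[float]]) -> float:
    """Highest similarity between a new account and any account banned
    from this channel; 0.0 if the channel has never banned anyone."""
    return max((cosine_similarity(candidate, b) for b in banned), default=0.0)


def flag_for(score: float, restrict_at: float = 0.9, monitor_at: float = 0.7) -> str:
    """Map a score onto a strict flag and a milder one (thresholds are made up)."""
    if score >= restrict_at:
        return "restricted"
    if score >= monitor_at:
        return "monitored"
    return "none"


# Hypothetical signal vectors for two accounts this channel has banned.
banned_accounts = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
print(flag_for(evasion_score([1.0, 0.0, 1.0], banned_accounts)))  # restricted
```

Scoring against a single channel's own ban list mirrors the "channel-level ban" framing in the statement: an account that resembles someone banned elsewhere on Twitch would not be flagged here.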

December 02, 2021 at 05:42AM
Twitch’s “ban evasion” flag is a bigger anti-hate tactic than you might think - Ars Technica